Someone is DDoS-ing my Azure Functions, and what to do


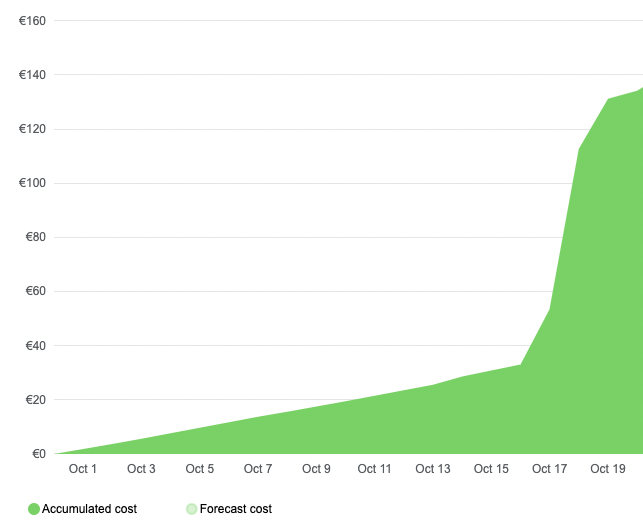

Last week, all of a sudden, my Azure subscription got suspended. As the subscription runs on credits and is optimized to be way below the limit, I was surprised to see that I suddenly reached the spending limit.

Usually, the monthly cost for the subscription is around 50-60 EUR. This month, it suddenly got above 150 EUR, but why?

Looking into cost management, I saw a cost spike in the Log Analytics service that Application Insights uses. Typically, this service costs me 10 EUR per month, but now, all of a sudden, it was above 90 EUR.

As this service is part of the Visitor Badge (visitorbadge.io) service I provide, I dug a bit deeper to see what was going on.

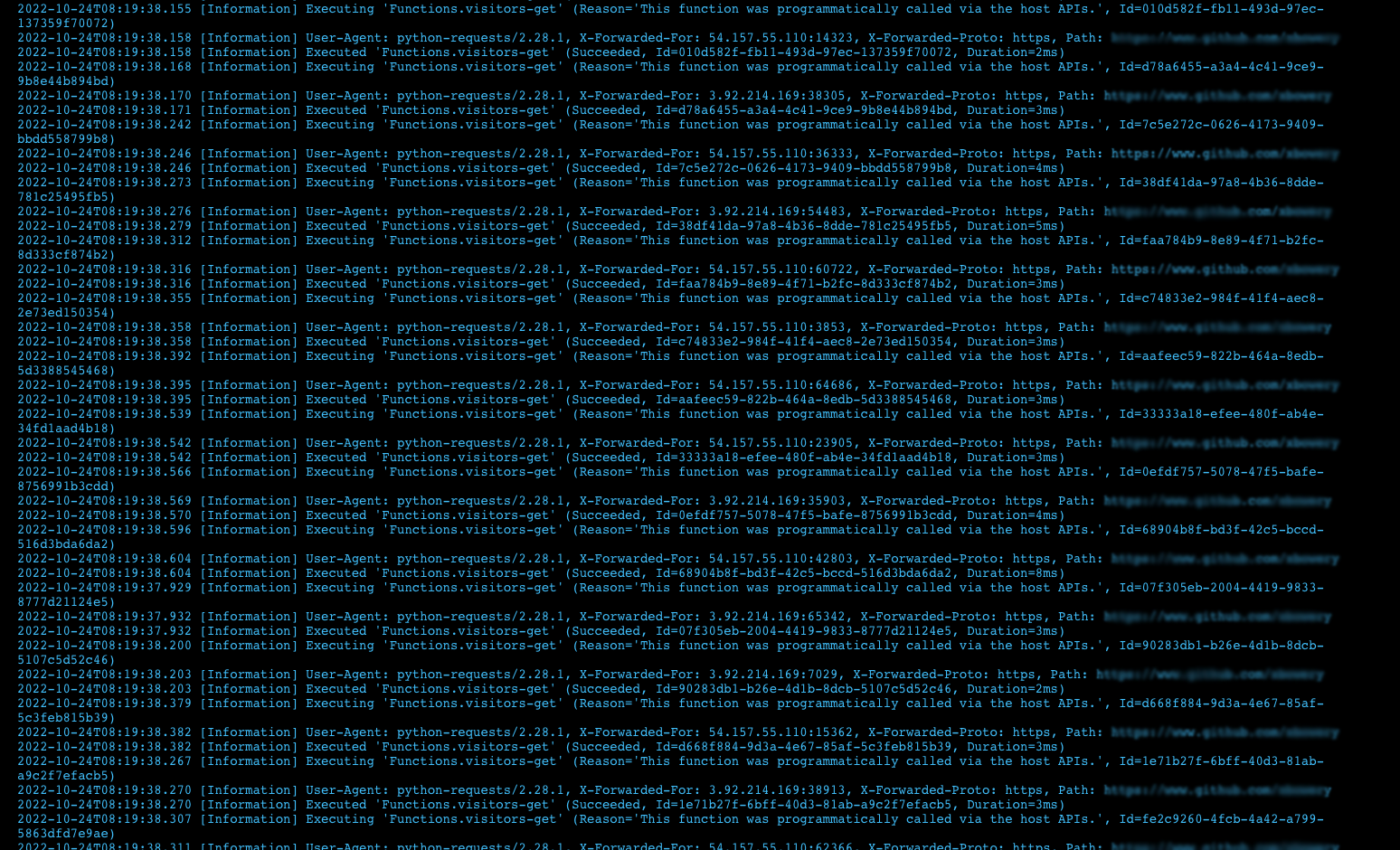

The Visitor Badge service consists of two parts: the website and the API that provides the badges. The API runs on an Azure Functions consumption plan. The price of this Azure Functions plan went up due to the execution time.

Investigating what was going on

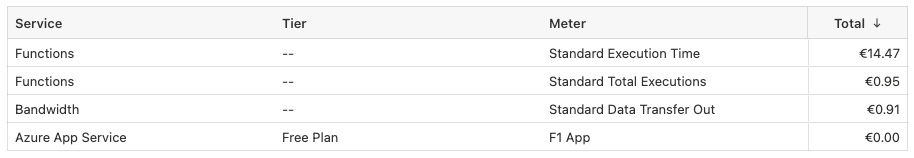

After checking the costs, I went to Application Insights to see the live metrics of the Azure Functions. When the metrics view loaded, I noticed there were a lot of servers/hosts running. It must have been above 20. I just remembered that I had to screenshot it at that moment.

A quick restart of the Azure Functions service stopped these servers/hosts, but more than 10 hosts quickly started up again, and the requests immediately came in again.

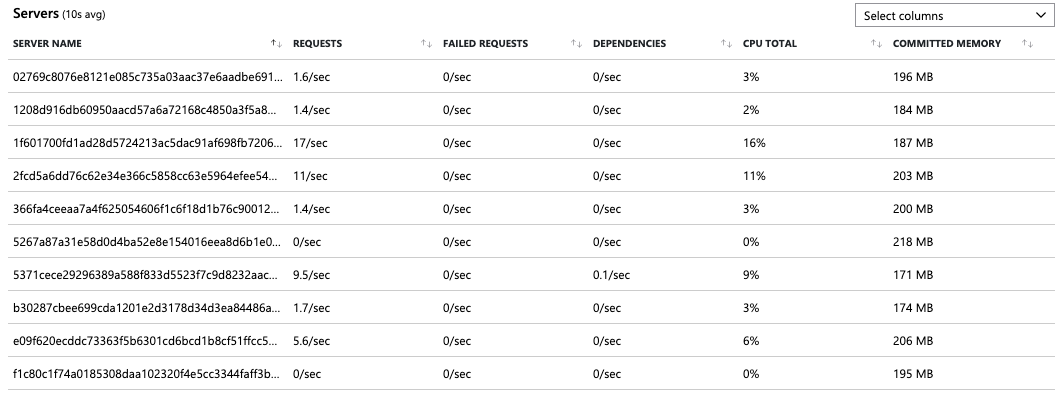

At that moment, I knew that something was spamming the API. The log output was even more fun, as it was impossible to follow.

Checking the function execution

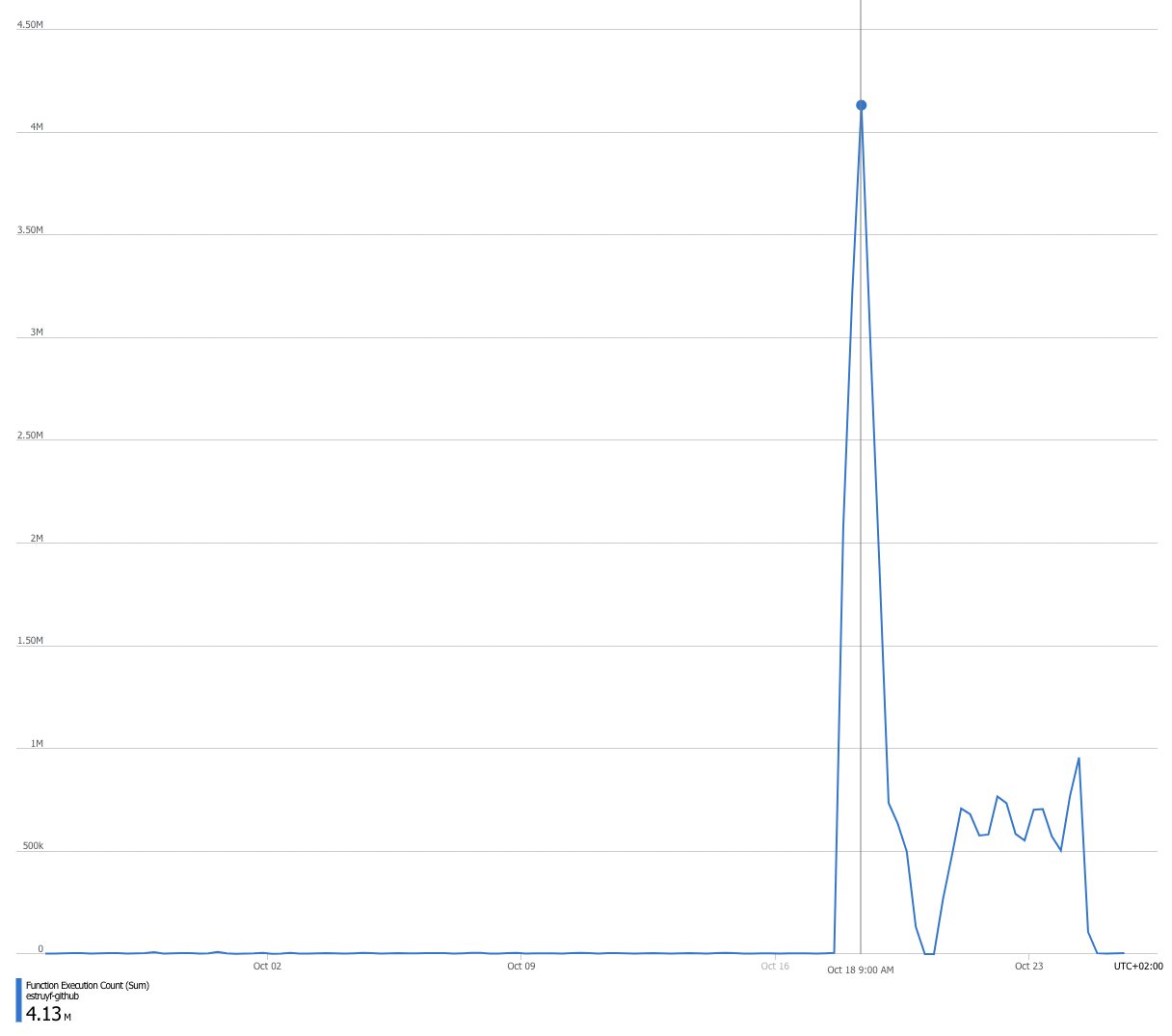

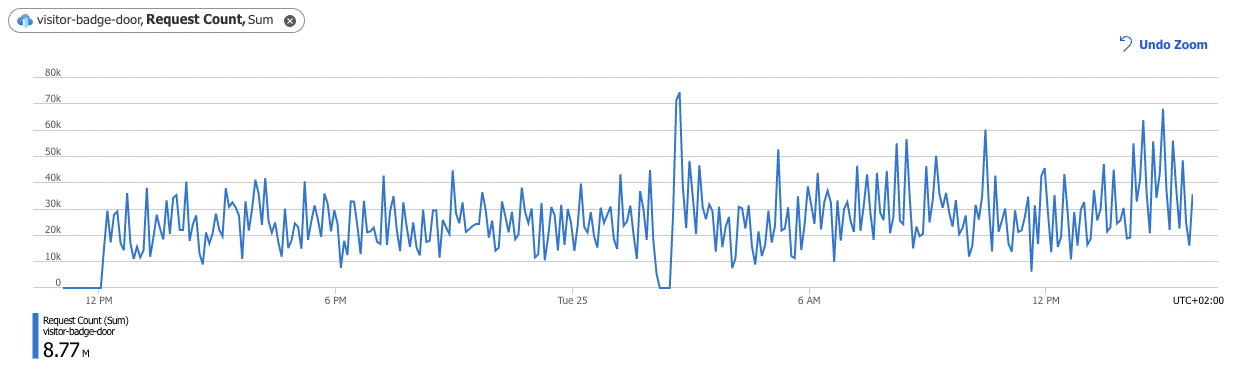

Opening the metrics in Application Insights showed that on the 16th of October, something started to call the API a lot. At some point, it was even 4 million executions.

That increased the cost of my Azure Functions and Log Analytics services.

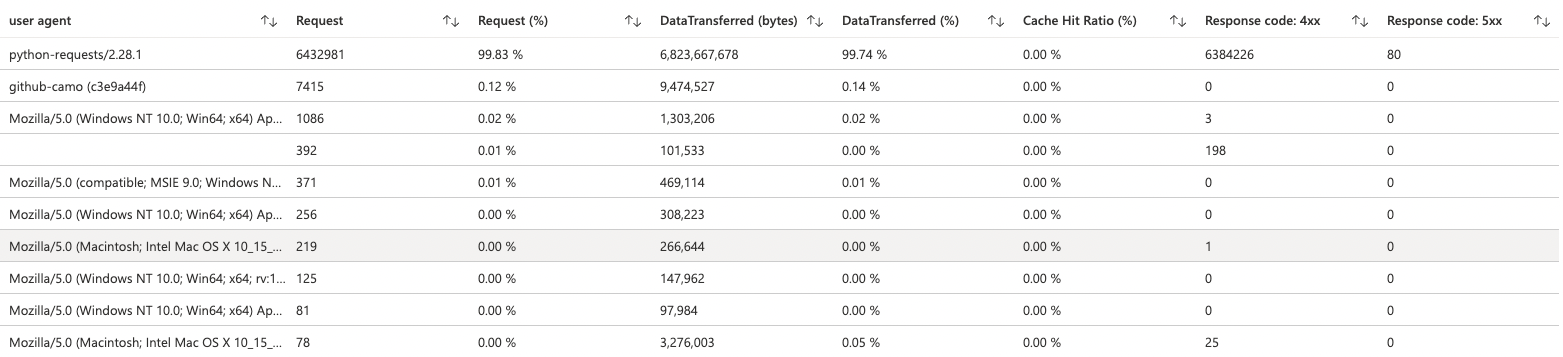

Digging into the logs to see what was causing it

The log screenshot above showed that a person configured a job on Amazon hosts to call the API with a Python script. All to increase the number of visitors on their GitHub profile.

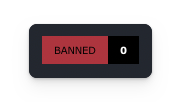

The first thing I implemented on the API was a way to ban specific paths/users. This is the first time it has happened since I launched the service, but apparently, people still like to fake their stats. If this happens again, the badges will now show up as follows.
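As for the ban check itself, the post does not show its implementation. A minimal sketch of the idea in Python, assuming a hypothetical set of banned path prefixes (`BANNED_PREFIXES`) and a hypothetical helper `is_banned`, could look like this:

```python
from urllib.parse import urlsplit

# Hypothetical ban list for illustration only; the real entries and where
# they are stored are not part of this post.
BANNED_PREFIXES = {"/badges/spammer"}


def normalize(path: str) -> str:
    """Drop the query string and trailing slash, and lowercase the path."""
    return urlsplit(path).path.rstrip("/").lower()


def is_banned(path: str, banned=BANNED_PREFIXES) -> bool:
    """Return True when the path equals a banned prefix or sits below it."""
    p = normalize(path)
    return any(p == b or p.startswith(b + "/") for b in banned)
```

Matching on a whole path segment (the `b + "/"` check) avoids banning an unrelated user whose name merely starts with the same characters. Note that a check like this still runs inside the function, so every banned request keeps counting as an execution.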

Still, the Python script kept DDoSing my Azure Functions API, so I had to find a better solution for it.

Opening a new door

Initially, I thought about blocking the IPs from which the calls originated, but as they were coming from Amazon services, I didn’t want to block any valid IPs.

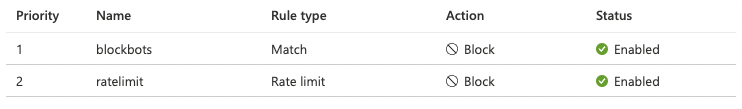

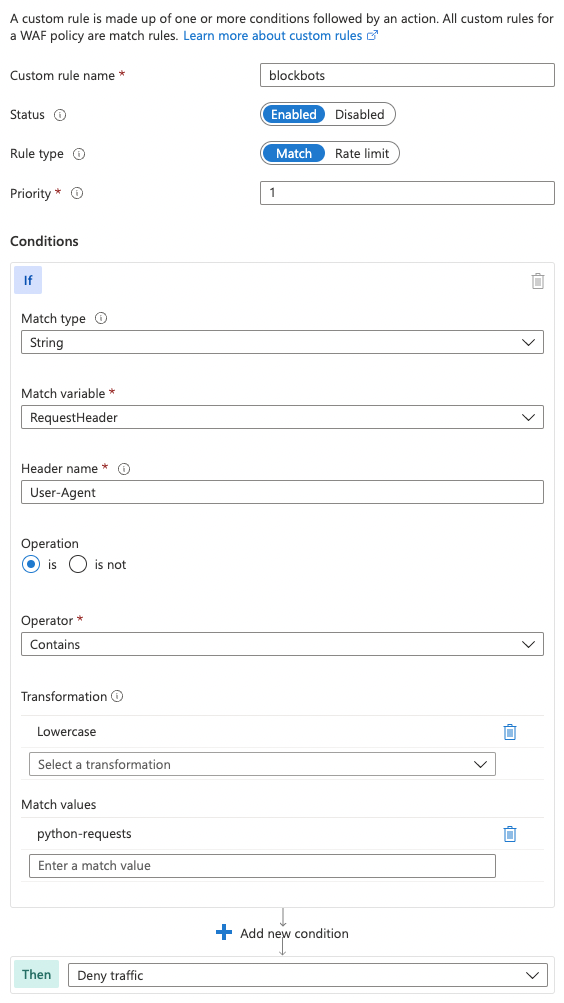

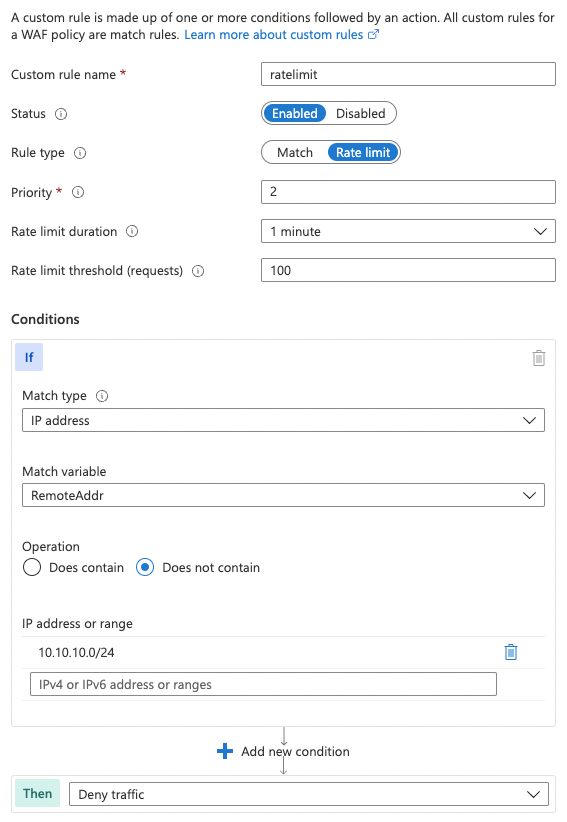

Solving the problem within the Azure Functions service or code would not be a solution, as every request would still trigger an execution on the consumption plan, so I eventually went for Azure Front Door with a couple of Web Application Firewall rules.

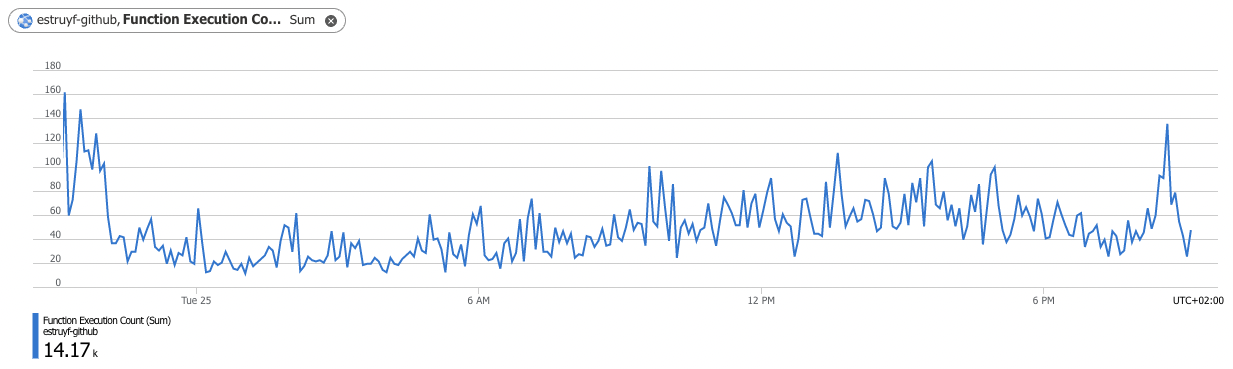

With these two rules in place, the number of Azure Function executions decreased drastically: a couple of user agents now get blocked, and all other requests are rate limited.

Here, the Python requests automatically get a 4xx code and will not hit the Azure Function.
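To illustrate what the user agent rule does conceptually, here is a small Python sketch. It is not the WAF configuration itself: the fragment list `BLOCKED_AGENT_FRAGMENTS`, the function `waf_status`, and the choice of 403 as the 4xx code are assumptions for the example.

```python
from typing import Optional

# Assumed user agent fragments; the values in the actual rule may differ.
BLOCKED_AGENT_FRAGMENTS = ("python-requests", "python-urllib")


def waf_status(user_agent: Optional[str]) -> int:
    """Return the status a request would get before it reaches the function.

    403 stands in for the 4xx response (an assumption); 200 means the
    request is passed on to the Azure Function.
    """
    ua = (user_agent or "").lower()
    if any(fragment in ua for fragment in BLOCKED_AGENT_FRAGMENTS):
        return 403
    return 200
```

The important part is where the check runs: because the firewall sits in front of the function, a blocked request never becomes a function execution.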

In a graph, it looks as follows:

Now on the Azure Functions side, things look a lot better:

The rule configuration

The rule to block specific user agents looks as follows:

The rule to rate limit by IPs looks like this:

Here are a couple of helpful articles I used to configure the rules:

- Configure a WAF rate limit rule

- Implement IP-based rate limiting in Azure Front Door

- Configure an IP restriction rule with a Web Application Firewall for Azure Front Door
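The idea behind rate limiting by IP can be sketched in a few lines of Python. This is only an illustration of counting requests per client IP in a fixed time window, not how Front Door implements it; the class name `FixedWindowLimiter`, the threshold, and the window length are all assumptions.

```python
class FixedWindowLimiter:
    """Allow at most `threshold` requests per IP in each time window."""

    def __init__(self, threshold: int, window_seconds: int = 60):
        self.threshold = threshold
        self.window_seconds = window_seconds
        # Maps an IP to (window index, request count in that window).
        self._counts = {}

    def allow(self, ip: str, now: float) -> bool:
        window = int(now // self.window_seconds)
        seen_window, count = self._counts.get(ip, (window, 0))
        if seen_window != window:
            # A new window started for this IP, so the counter resets.
            count = 0
        count += 1
        self._counts[ip] = (window, count)
        return count <= self.threshold


# Example usage with made-up values: 3 requests per 60 seconds per IP.
limiter = FixedWindowLimiter(threshold=3, window_seconds=60)
results = [limiter.allow("203.0.113.7", now=t) for t in (0, 1, 2, 3)]
```

In this example the first three calls are allowed and the fourth is rejected until the next window starts, while requests from other IPs are counted separately.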

Hopefully, the Visitor Badge will run smoothly again.
